Concurrency Control in Rails

Scenario

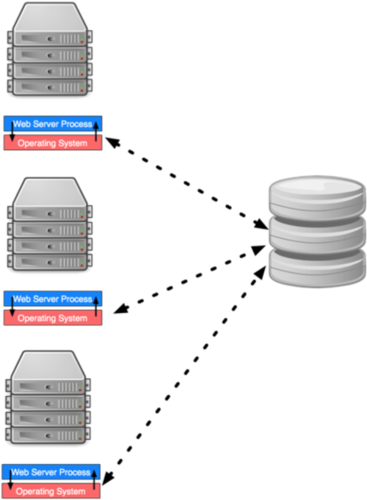

We have many application servers running our application. We are using a relational database to ensure that each request observes a consistent view of the database.

Locks in rails

Rails uses two types of concurrency control

- Optimistic Locking: Assume that database modifications are going to succeed. Throw an exception when they do not.

- Pessimistic Locking: Ensure that database modifications will succeed by explicitly avoiding conflict.

Optimistic Lock

Definition

Any ActiveRecord model will automatically utilize optimistic locking if an integer lock_version field is added to the object’s table. Whenever such an ActiveRecord object is read from the database, that object contains its associated lock_version. When an update for the object occurs, Rails compares the object’s lock_version to the most recent one in the database. If they differ a StaleObjectException is thrown. Otherwise, the data is written to the database, and the lock_version value is incremented. This optimistic locking is an application-level construct. The database does nothing more than storing the lock_version values.

Example

1 | c1 = Person.find(1) |

Strengths

- Predictable performance

- Lightweight

Weaknesses

- Have to write error handling code

- (or) errors will propagate to your users

Pessimistic Lock

Definition

Easily done by calling lock along with ActiveRecord find. Whenever an ActiveRecord object is read from the database with that option an exclusive lock is acquired for the object. While this lock is held, the database prevents others from obtaining the lock, reading from, and writing to the object. The others are blocked until the object is unlocked. Implemented using the SELECT FOR UPDATE SQL.

Example

Passing

Request 1

1 | transaction do |

Request 2

1 | transaction do |

Blocking

Request 1

1 | transaction do |

Request 2

1 | transaction do |

Issue: Deadlock

Request 1

1 | transaction do |

Request 2

1 | transaction do |

Strengths

- Failed transactions are incredibly rare or nonexistent

Weaknesses

- Have to worry about deadlocks and avoiding them

- Performance is less predictable

DB Bottlenecks

By changing the way you interact with the Rails ORM (ActiveRecord) you can significantly improve performance.

Development Mode in Rails

In development mode, Rails will output the SQL it generates and executes to the application server log.

To (temporarily) enable debugging in production mode, change config/environments/production.rb to contain:

1 | config.log_level = :debug |

Investigation

1 | Processing by SubmissionsController#index as HTML |

That is a lot of

SELECTqueries!

We are issuing a ton of SELECT queries. The overhead associated with each is slowing us down.

Issue fewer queries.

Methodology

- Do not ask for the community each time

- Do not ask for the number of comments each time

Optimization: Reducing (N+1) Queries in Rails

(Before) Without includes

1 | class SubmissionsController < ApplicationController |

(After) With includes

1 | class SubmissionsController < ApplicationController |

Result

1 | Result: ActiveRecord 39.6ms |

https://guides.rubyonrails.org/active_record_querying.html#includes

With

includes, Active Record ensures that all of the specified associations are loaded using the minimum possible number of queries.Revisiting the above case using the

includesmethod, we could rewriteBook.limit(10)to eager load authors:

2

3

4

5

books.each do |book|

puts book.author.last_name

endThe above code will execute just 2 queries, as opposed to the 11 queries from the original case:

2

3

SELECT authors.* FROM authors

WHERE authors.book_id IN (1,2,3,4,5,6,7,8,9,10)

Further optimization: SQL Explanation

Sometimes things are still slow even when the number of queries is minimized. SQL provides an EXPLAIN statement that can be used to analyze individual queries.

When a query starts with EXPLAIN…

- the query is not actually executed

- the produced output will help us identify potential improvements

- e.g. sequential vs index scan, startup vs total cost

Optimizations

Three primary ways to optimize SQL queries:

- Add or modify indexes

- Modify the table structure

- Directly optimize the query

SQL Indexes

An index is a fast, ordered, compact structure (often B-tree) for identifying row locations. When an index is provided on a column that is to be filtered (searching for a particular item), the database is able to quickly find that information. Indexes can exist on a single column, or across multiple columns. Multi-column indexes are useful when filtering on two columns (e.g., CS classes that are not full).

To add an index on the name field of the Product table, create a migration containing:

1 | class AddNameIndexProducts < ActiveRecord::Migration |

Foreign Keys

By default, when dealing with relationships between ActiveRecord objects, Rails will validate the constraints in the application layer. For example, an Employee object should have a Company that they work for. Assuming the relationship is defined properly, Rails will enforce that when creating an Employee, the associated Company exists. Many databases, have built-in support for enforcing such constraints. With rails, one can also take advantage of the database’s foreign key support via add_foreign_key.

1 | class AddForeignKeyToOrders < ActiveRecord::Migration |

Optimize the Table Structure

Indexes work best when they can be kept in memory. Sometimes changing the field type, or index length can provide significant memory savings.

If appropriate some options are:

- Reduce the length of a VARCHAR index if appropriate

- Use a smaller unsigned integer type

- Use an integer or enum field for statuses rather than a text-based value

Directly Optimize the Query

Before

1 | explain select count(*) from txns where parent_id - 1600 = 16340; |

After

1 | explain select count(*) from txns where parent_id = 16340 + 1600 |